For the upcoming exhibition at the National Museum of Modern and Contemporary Art (MMCA), I researched and tested various generative models and methods on GAN inversion to create a desirable latent walk video. Here, I’ll like to share and summarize couple papers I read for this project. In particular, I investigate applications to StyleGAN. The main takeaways from these papers are:

Interpretable and controllable dimensions in the latent space of the Generative Adversarial Networks (GANs)

Requires no training and applicable with pre-trained model weights only

Introduction

Generative Adversarial Networks (GANs) are image synthesis models that can generate a variation of high-quality images.

Conventional generator in GANs takes d-dimensional latent input and maps to higher-dimensional image space. Typically, the latent inputs go through several layers or steps and transition from one space to another, at which the final space is an RGB Image space.

In StyleGAN, the output of each layer is controlled by a non-linear function of z. M is an 8-layer perceptron.

Methodologies

GANSpace: Discovering Interpretable GAN Controls

[Harkonen et al.] project page: https://github.com/harskish/ganspace

GANSpace is an unsupervised method that discovers important latent directions through Principle Component Analysis (PCA) in a latent space or a feature space of GAN.

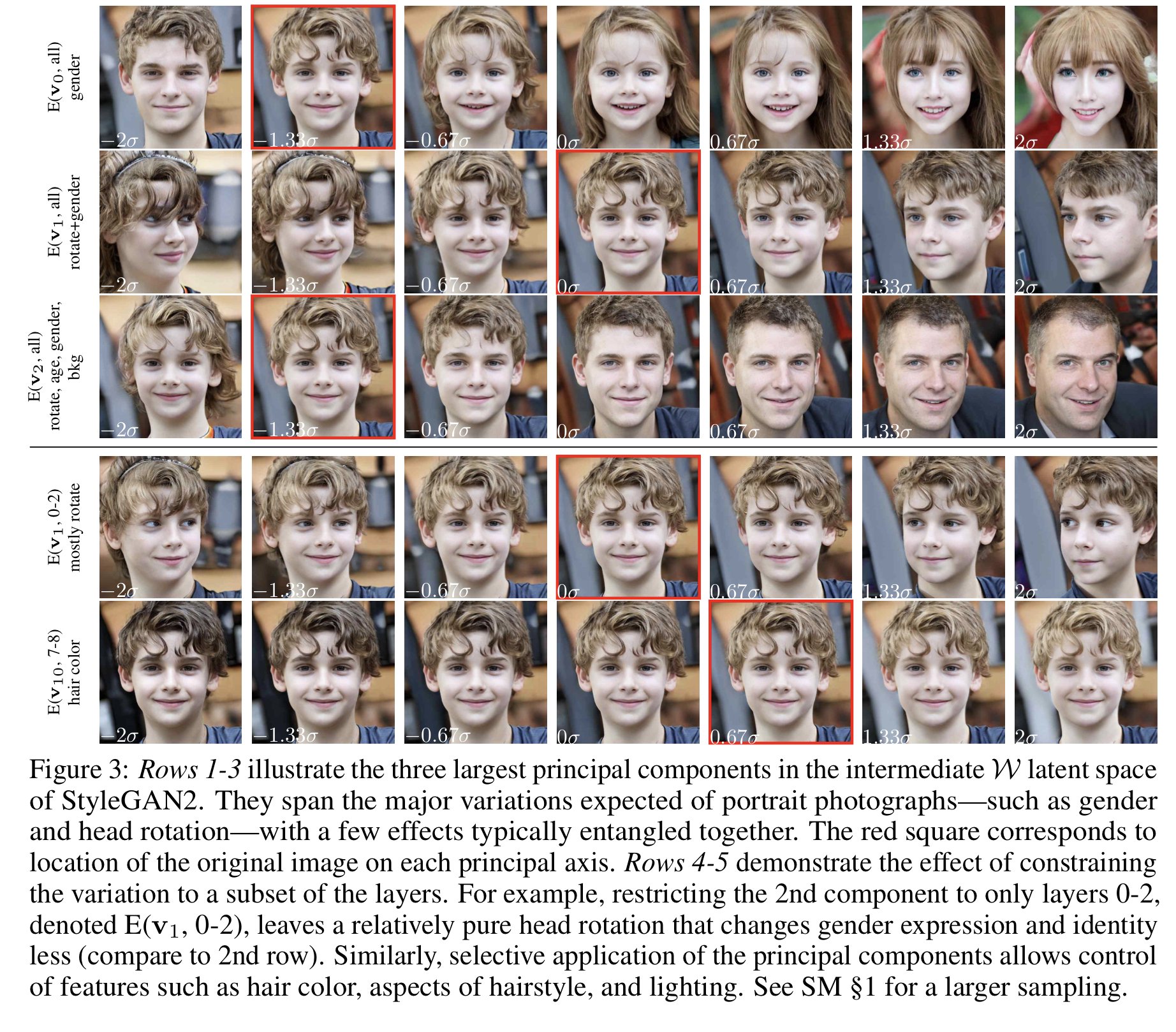

For application to StyleGAN, authors computed PCA on w values that correspond to randomly sampled N vectors of z. Then, an image defined by w can be easily edited by varying PCA coordinates x.

Next, authors show that the directions found with PCA can be further decomposed into interpretable controls.

The experimental results on layer-wise edits are shown image below. First 20 principal components affect large-scale changes such as geometric configuration and viewpoint, and other control object appearance or background and details. Authors claim that the first 100 principal components are sufficient to describe overall image appearance.

SEFA: Closed-Form Factorization of Latent Semantics in GANs.

[Shen et al.] project website: https://github.com/genforce/sefa

SEFA is an unsupervised method for learning semantic directions in a latent space. Unlike GANSpace, it requires no sampling or computing PCA.

In particular, the paper focuses on directly acting on the latent space. The authors first define the manipulation of a latent vector z as the equation below.

The image can be independently altered by linearly editing the latent code z along the identified semantic direction n. The variable, α, indicates manipulation intensity.

Edited latent code can be expanded as above, where A is a weight parameter and b is a bias parameter. This shows that the image can be easily edited by adding a term αAn to a projected code after the first layer. Therefore, the authors claim that A must contain essential information for image variation, and suggest solving the following optimization problem.

where N = [n1,n2,…nk], or k-most important directions, correspond to the top-k semantics.

By using the Lagrange mutlipler and taking the partial derivate on each n_i, the problem reduce to following equation below.

All possible solutions of the matrix AtA would be the eigenvector, or learned directions. From here, top-k eigenvectors with k largest eigenvalues are selected.

Application to StyleGAN

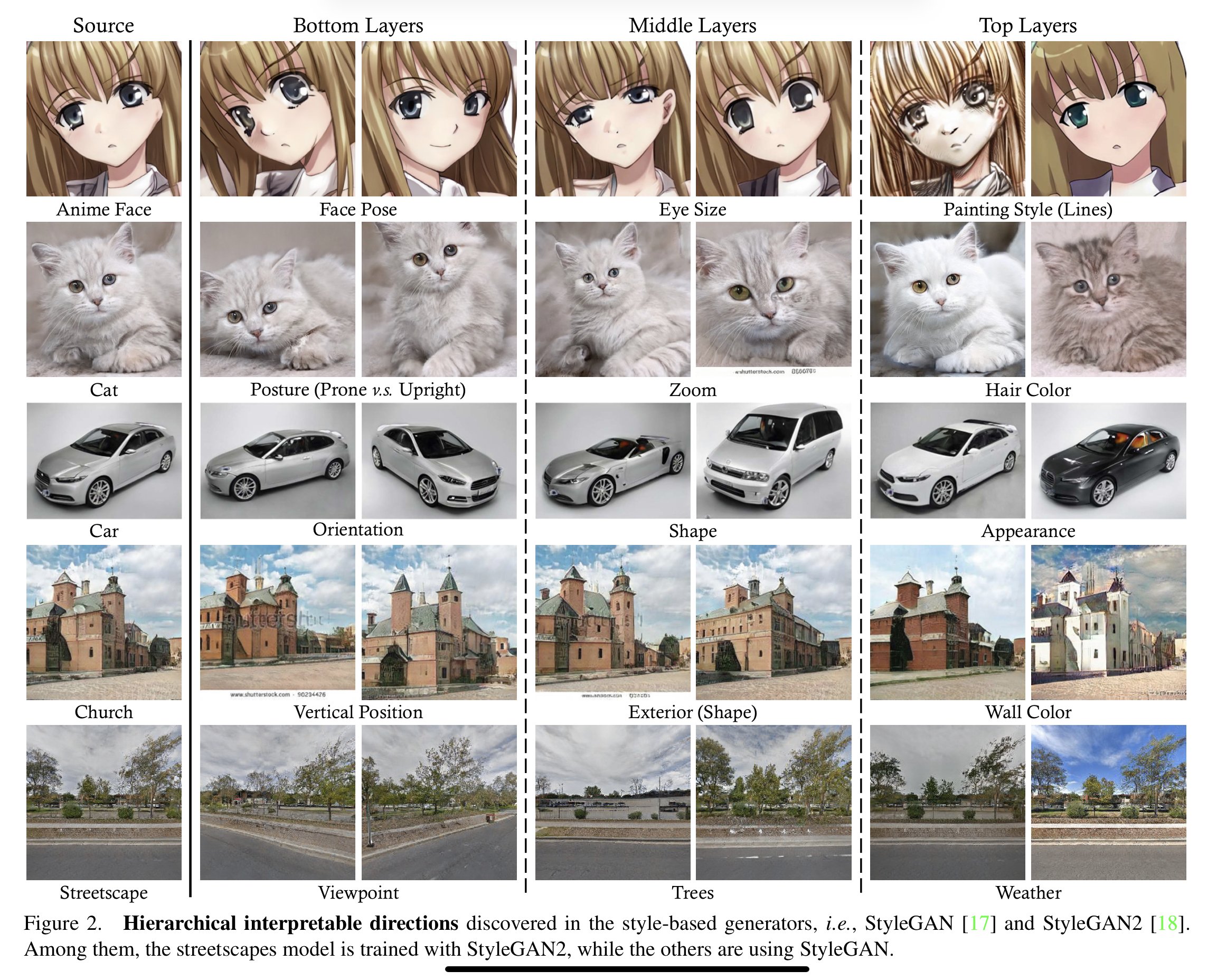

In StyleGAN, the authors examined the transformation of the latent code to the style code. Style code refers to the projected latent code after each convolution layer. To apply SEFA to StyleGAN, authors concatenate the weight parameters (A) from all target layers along the first axis, forming a larger transformation matrix.

Interestingly, results display the hierarchy of learned attributes. For example, the bottom ten layers control the rotation or pose, middle layers determine the shape, while top layers correspond to the color or the finer detail.

Sample Results

Here is an example of the result using a custom dataset collected for the work titled “Vision” currently showing at the MMCA. Since the custom dataset dosen’t really have a global/coarse property like ‘pose’ , you can see that the changes in the images within each sample are not very apparent .